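Contents

- Foreword
- Mask modes
- PASCAL-VOC2012
  - Download
  - Dataset overview
  - Data loading (dataloader)
- CamVid
  - Download
  - Dataset overview
  - Data loading (dataloader)
- Cityscapes
  - Download
  - Dataset overview
  - Dataset processing
  - Data loading (dataloader)
- ADE20K
  - Download
  - Dataset overview
  - Data loading (dataloader)
- COCO2017
  - Download
  - Dataset overview
  - Dataset processing
  - Data loading (dataloader)
- Conclusion
- References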

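Foreword

This post introduces the datasets most commonly used for semantic segmentation, along with how to preprocess and load each of them. It is part of my semantic segmentation series. If the field interests you, take a look at the rest of the series, which walks through the classic models and their code in detail, including code you can reproduce!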

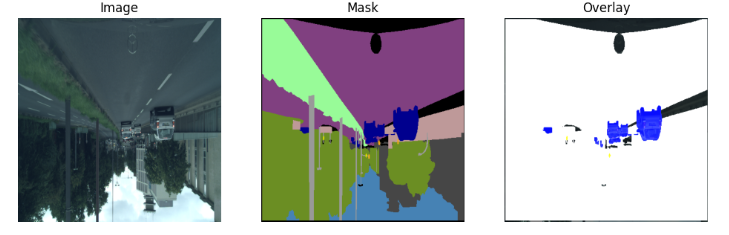

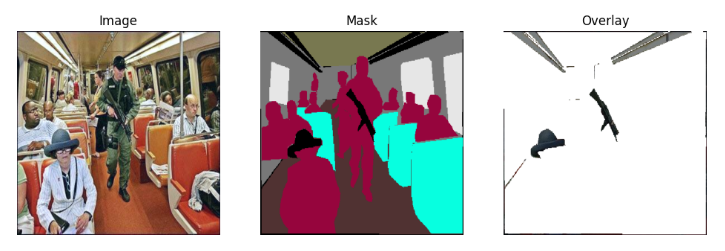

mask模式

在讲数据集前,首先向大家介绍一下我们语义标注文件的格式。

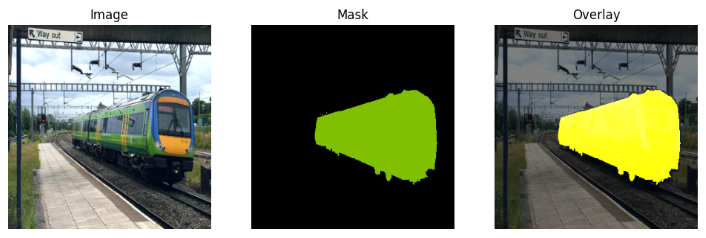

在语义分割中,标注文件一般都是P模式(调色板模式)或者是L模式(灰度模式)。如下图所示,左边就是L模式的图片展示,右边就是P模式的图片展示。

其实两种模式的内容是相同的,都是单通道的,但是其所表示的含义不同。P模式中的数字代表的是类别到调色板的映射,什么意思呢?就是8位最多有256个(0-255),其中每一个都映射了调色版中的一个颜色,当然最多也就只能映射256个颜色了,每个颜色代表了一个类别。P模式的标注图能够更直观的让我们看到图像的标注。而L模式中的数字代表的就是灰度值,同样的每个灰度值代表了一个类别。
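To make the palette idea concrete, here is a minimal NumPy sketch of what P mode does conceptually: the stored array holds class indices, and a separate table turns them into colors. The 3-entry `palette` and the 2x3 `index_mask` are made-up values for illustration, not taken from any dataset.

```python
import numpy as np

# Hypothetical 3-class palette: row i is the RGB color for class index i.
palette = np.array([[0, 0, 0], [128, 0, 0], [0, 128, 0]], dtype=np.uint8)

# Single-channel mask: what a P-mode (or L-mode) label file stores per pixel.
index_mask = np.array([[0, 1, 1],
                       [2, 2, 0]], dtype=np.uint8)

# Palette lookup: fancy indexing maps every index to its color -> (H, W, 3).
color_mask = palette[index_mask]

print(index_mask.shape, color_mask.shape)
print(color_mask[0, 1])
```

The training code only ever needs `index_mask`; the colors exist purely for viewing.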

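You can check an image's mode like this:

    from PIL import Image

    print(Image.open('image.png').mode)

Reading and writing an L-mode (grayscale) mask:

    import cv2
    import numpy as np
    from PIL import Image

    # Read, option 1: OpenCV with flag 0 (grayscale)
    label = cv2.imread(label_path, 0)
    # Read, option 2: PIL
    label = np.asarray(Image.open(label_path), dtype=np.int32)

    # Write, option 1: OpenCV (gray_image is a NumPy array here)
    cv2.imwrite('gray_image.png', gray_image)
    # Write, option 2: PIL (gray_image is a PIL Image here)
    gray_image.save('gray_image.png')

Reading and writing a P-mode (palette) mask:

    import imgviz
    import numpy as np
    from PIL import Image

    # Read: the array holds palette indices, i.e. class IDs
    label = np.asarray(Image.open(label_path), dtype=np.int32)

    # Write: attach a palette so the mask is displayed in color
    def save_colored_mask(mask, save_path):
        lbl_pil = Image.fromarray(mask.astype(np.uint8), mode="P")
        colormap = imgviz.label_colormap()
        lbl_pil.putpalette(colormap.flatten())
        lbl_pil.save(save_path)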

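| Mode | Channels | Meaning of each pixel | Used for | Common way to read | Notes |
| --- | --- | --- | --- | --- | --- |
| P (Palette) | 1 (index) | Class ID, looked up in the palette to get a color | Semantic segmentation annotations (class-index type) | `PIL.Image.open()` | Pixel value is the class index; the palette maps it to RGB |
| L (Luminance) | 1 (gray value) | Gray value, 0-255 | Grayscale images, depth maps, label maps | `PIL.Image.open().convert('L')` or `cv2.imread(..., 0)` | Directly encodes brightness or class ID; no palette |
| RGB | 3 | True color (R, G, B each 0-255) | Color images, visualizations | `cv2.imread()` / `PIL.Image.open().convert('RGB')` | Each pixel is a direct color value (note that `cv2.imread()` returns channels in BGR order) |

PASCAL-VOC2012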

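Download

Dataset name: PASCAL-VOC2012

Download page: The PASCAL Visual Object Classes Challenge 2012 (VOC2012)

Download it from that page; pick the archive of roughly 2 GB.

Dataset overview

VOC2012 is part of the long-running Pascal Visual Object Classes (VOC) challenge and is widely used for image classification, object detection, and semantic segmentation. Its segmentation task covers 20 object classes (plus background) and is mainly used to evaluate object-level segmentation accuracy.

Key facts:

- Number of images: 1,464 training images, 1,449 validation images, and 1,456 test images.

- Classes: 20 object classes such as people, animals, vehicles, and furniture; every image has pixel-level labels.

- Format: each image comes with a matching annotation map (label map) whose pixel values are the object class IDs.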
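The class IDs in a VOC label map are shown through the standard VOC palette. As far as I know, that palette follows a simple bit-interleaving rule, and the sketch below reproduces it in plain Python; `voc_colormap` is a name chosen here for illustration. Its first 21 entries should match the hand-written `VOC_COLORMAP` table in the data-loading code.

```python
def voc_colormap(n=256):
    """Generate the first n colors of the PASCAL VOC palette."""
    colormap = []
    for index in range(n):
        r = g = b = 0
        c = index
        for j in range(8):
            # Spread the low three bits of c over the high bits of R, G, B.
            r |= ((c >> 0) & 1) << (7 - j)
            g |= ((c >> 1) & 1) << (7 - j)
            b |= ((c >> 2) & 1) << (7 - j)
            c >>= 3
        colormap.append([r, g, b])
    return colormap


colors = voc_colormap(21)
print(colors[1], colors[15], colors[20])
```

Generating the table this way avoids typing 21 colors by hand and also covers indices beyond 20 if you ever need them.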

Data loading (dataloader)

VOC2012 annotations are stored in P mode.

    import os
    import random

    import numpy as np
    import torch
    import torchvision.transforms as T
    from PIL import Image
    from torch.utils.data import Dataset, DataLoader

    VOC_CLASSES = [
        'background', 'aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'bus',
        'car', 'cat', 'chair', 'cow', 'diningtable', 'dog', 'horse',
        'motorbike', 'person', 'potted plant', 'sheep', 'sofa', 'train', 'tv/monitor'
    ]

    VOC_COLORMAP = [
        [0, 0, 0], [128, 0, 0], [0, 128, 0], [128, 128, 0],
        [0, 0, 128], [128, 0, 128], [0, 128, 128], [128, 128, 128],
        [64, 0, 0], [192, 0, 0], [64, 128, 0], [192, 128, 0],
        [64, 0, 128], [192, 0, 128], [64, 128, 128], [192, 128, 128],
        [0, 64, 0], [128, 64, 0], [0, 192, 0], [128, 192, 0], [0, 64, 128]
    ]

    class VOCSegmentation(Dataset):
        def __init__(self, root, split='train', img_size=320, augment=True):
            super(VOCSegmentation, self).__init__()
            self.root = root
            self.split = split
            self.img_size = img_size
            self.augment = augment

            img_dir = os.path.join(root, 'JPEGImages')
            mask_dir = os.path.join(root, 'SegmentationClass')
            split_file = os.path.join(root, 'ImageSets', 'Segmentation', f'{split}.txt')
            if not os.path.exists(split_file):
                raise FileNotFoundError(split_file)
            with open(split_file, 'r') as f:
                file_names = [x.strip() for x in f.readlines()]

            self.images = [os.path.join(img_dir, x + '.jpg') for x in file_names]
            self.masks = [os.path.join(mask_dir, x + '.png') for x in file_names]
            assert len(self.images) == len(self.masks)
            print(f"{split} set loaded: {len(self.images)} samples")

            self.normalize = T.Normalize(mean=[0.485, 0.456, 0.406],
                                         std=[0.229, 0.224, 0.225])

        def __getitem__(self, index):
            img = Image.open(self.images[index]).convert('RGB')
            # The mask is P mode: class indices 0-20 (VOC also uses 255 for void/boundary pixels)
            mask = Image.open(self.masks[index])

            # Resize; NEAREST keeps the mask values valid class indices
            img = img.resize((self.img_size, self.img_size), Image.BILINEAR)
            mask = mask.resize((self.img_size, self.img_size), Image.NEAREST)

            # Convert to tensors
            img = T.functional.to_tensor(img)
            mask = torch.from_numpy(np.array(mask)).long()

            # Data augmentation
            if self.augment:
                if random.random() > 0.5:
                    # Flip image and mask together so they stay aligned
                    img = T.functional.hflip(img)
                    mask = T.functional.hflip(mask)
                if random.random() > 0.5:
                    img = T.ColorJitter(brightness=0.2, contrast=0.2, saturation=0.2, hue=0.2)(img)

            img = self.normalize(img)
            return img, mask

        def __len__(self):
            return len(self.images)

    def get_dataloader(data_path, batch_size=4, img_size=320, num_workers=4):
        train_dataset = VOCSegmentation(root=data_path, split='train', img_size=img_size, augment=True)
        val_dataset = VOCSegmentation(root=data_path, split='val', img_size=img_size, augment=False)
        train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size, pin_memory=True, num_workers=num_workers)
        val_loader = DataLoader(val_dataset, shuffle=False, batch_size=batch_size, pin_memory=True, num_workers=num_workers)
        return train_loader, val_loader
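One detail worth checking in your own copy of the data: VOC segmentation masks normally mark object boundaries and hard regions with the value 255, which is not a class. The sketch below shows one way to leave those pixels out of a metric. `pixel_accuracy` and `IGNORE_INDEX` are hypothetical names and the arrays are toy data. In PyTorch, the same effect during training is usually obtained by passing `ignore_index=255` to `torch.nn.CrossEntropyLoss`.

```python
import numpy as np

IGNORE_INDEX = 255  # assumed void label, as used for VOC boundary pixels


def pixel_accuracy(pred, target, ignore_index=IGNORE_INDEX):
    """Fraction of correctly predicted pixels, skipping ignored ones."""
    valid = target != ignore_index
    if valid.sum() == 0:
        return 0.0
    return float((pred[valid] == target[valid]).mean())


# Toy 2x3 example: the last column of the target is void.
target = np.array([[0, 15, 255], [15, 15, 255]])
pred = np.array([[0, 15, 3], [0, 15, 7]])
accuracy = pixel_accuracy(pred, target)
print(accuracy)
```

Here four pixels are valid and three of them are predicted correctly, so the predictions on the void column do not affect the score.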

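CamVid

Download

Dataset name: CamVid

Download page: Object Recognition in Video Dataset

CamVid comes in two variants. The first is the official release at the page above, which has 32 classes and a finer-grained labeling. The official site is often hard to reach, so you can instead download CamVid (Cambridge-Driving Labeled Video Database) from Kaggle.

The second variant has 11 classes (not counting background). It merges semantically similar classes, so the labeling is coarser and the task is somewhat easier. (If you cannot find it online, leave a comment or message me and I will share it.)

Dataset overview

CamVid is mainly used for semantic segmentation of driving scenes. It contains annotated images of roads, traffic signs, vehicles, and other classes, and aims to improve how autonomous driving systems perform in road scenes.

Key facts:

- Number of images: 701 frames taken from video sequences, split into training, validation, and test sets.

- Classes: 32 classes (an 11-class variant also exists), including road, building, vehicle, and pedestrian.

- Challenges: the data comes mainly from urban traffic scenes, so it involves varying weather, lighting, and traffic density.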

Data loading (dataloader)

The CamVid copy I downloaded from Kaggle ships its masks as RGB images, so they first have to be converted to single-channel class-index masks (L mode).

    import os

    import albumentations as A
    import numpy as np
    from albumentations.pytorch.transforms import ToTensorV2
    from PIL import Image
    from torch.utils.data import Dataset, DataLoader

    # 32 classes
    Cam_CLASSES = ['Animal', 'Archway', 'Bicyclist', 'Bridge', 'Building', 'Car', 'CartLuggagePram', 'Child',
                   'Column_Pole', 'Fence', 'LaneMkgsDriv', 'LaneMkgsNonDriv', 'Misc_Text', 'MotorcycleScooter',
                   'OtherMoving', 'ParkingBlock', 'Pedestrian', 'Road', 'RoadShoulder', 'Sidewalk', 'SignSymbol',
                   'Sky', 'SUVPickupTruck', 'TrafficCone', 'TrafficLight', 'Train', 'Tree', 'Truck_Bus', 'Tunnel',
                   'VegetationMisc', 'Void', 'Wall']

    # Used for visualization and for decoding the RGB masks
    Cam_COLORMAP = [
        [64, 128, 64], [192, 0, 128], [0, 128, 192], [0, 128, 64],
        [128, 0, 0], [64, 0, 128], [64, 0, 192], [192, 128, 64],
        [192, 192, 128], [64, 64, 128], [128, 0, 192], [192, 0, 64],
        [128, 128, 64], [192, 0, 192], [128, 64, 64], [64, 192, 128],
        [64, 64, 0], [128, 64, 128], [128, 128, 192], [0, 0, 192],
        [192, 128, 128], [128, 128, 128], [64, 128, 192], [0, 0, 64],
        [0, 64, 64], [192, 64, 128], [128, 128, 0], [192, 128, 192],
        [64, 0, 64], [192, 192, 0], [0, 0, 0], [64, 192, 0]
    ]

    # Convert an RGB mask to class IDs
    def mask_to_class(mask):
        mask_class = np.zeros((mask.shape[0], mask.shape[1]), dtype=np.uint8)
        for idx, color in enumerate(Cam_COLORMAP):
            color = np.array(color)
            # Find every pixel that matches the current color
            matches = np.all(mask == color, axis=-1)
            mask_class[matches] = idx
        return mask_class

    class CamVidDataset(Dataset):
        def __init__(self, image_dir, label_dir):
            self.image_dir = image_dir
            self.label_dir = label_dir
            self.transform = A.Compose([
                A.Resize(448, 448),
                A.HorizontalFlip(),
                A.VerticalFlip(),
                A.Normalize(),
                ToTensorV2(),
            ])
            self.images = sorted(os.listdir(image_dir))
            self.labels = sorted(os.listdir(label_dir))
            assert len(self.images) == len(self.labels), "Images and labels count mismatch!"

        def __len__(self):
            return len(self.images)

        def __getitem__(self, idx):
            img_path = os.path.join(self.image_dir, self.images[idx])
            label_path = os.path.join(self.label_dir, self.labels[idx])
            image = np.array(Image.open(img_path).convert("RGB"))
            label_rgb = np.array(Image.open(label_path).convert("RGB"))
            # RGB to class index
            label_class = mask_to_class(label_rgb)
            # Albumentations expects (H, W, 3) and (H, W)
            transformed = self.transform(image=image, mask=label_class)
            return transformed['image'], transformed['mask']

    def get_dataloader(data_path, batch_size=4, num_workers=4):
        train_dir = os.path.join(data_path, 'train')
        val_dir = os.path.join(data_path, 'val')
        trainlabel_dir = os.path.join(data_path, 'train_labels')
        vallabel_dir = os.path.join(data_path, 'val_labels')
        train_dataset = CamVidDataset(train_dir, trainlabel_dir)
        val_dataset = CamVidDataset(val_dir, vallabel_dir)
        train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size, pin_memory=True, num_workers=num_workers)
        val_loader = DataLoader(val_dataset, shuffle=False, batch_size=batch_size, pin_memory=True, num_workers=num_workers)
        return train_loader, val_loader
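A possible refinement of `mask_to_class`, offered as a sketch rather than the method used in this post: because that function starts from an all-zero array, any pixel whose color is missing from the table silently ends up as class 0. The variant below packs each RGB triple into one integer and marks unmatched pixels with 255 so they can be ignored later. `rgb_mask_to_class`, `unknown`, and the toy data are assumptions made for illustration.

```python
import numpy as np


def rgb_mask_to_class(mask_rgb, colormap, unknown=255):
    """Map an (H, W, 3) color mask to an (H, W) class-index mask."""
    mask_rgb = mask_rgb.astype(np.int64)
    # Pack each RGB triple into one integer so a pixel is a single number.
    codes = (mask_rgb[..., 0] << 16) | (mask_rgb[..., 1] << 8) | mask_rgb[..., 2]
    # Start from `unknown` so colors outside the table are easy to spot.
    out = np.full(codes.shape, unknown, dtype=np.uint8)
    for idx, (r, g, b) in enumerate(colormap):
        out[codes == ((r << 16) | (g << 8) | b)] = idx
    return out


# Toy data: three table colors plus one pixel whose color is not in the table.
toy_colormap = [[64, 128, 64], [192, 0, 128], [0, 128, 192]]
toy_mask = np.array([[[64, 128, 64], [0, 128, 192]],
                     [[192, 0, 128], [1, 2, 3]]], dtype=np.uint8)
class_mask = rgb_mask_to_class(toy_mask, toy_colormap)
print(class_mask)
```

Comparing one integer per pixel instead of three channels also tends to be a little faster on full-size frames, though I have not benchmarked it here.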

Cityscape

下载

数据集名称:Cityscape

数据集下载地址:Download – Cityscapes Dataset

需要注册才能够登录,然后这三个都需要进行下载,因为后续我们数据处理的时候会要用到的。

其中leftImg8bit就是图片文件,gtFine就是精细标注的文件,gtCoarse就是粗略标注的文件。其实我们只需要用到的就是gtFine中的,但是我们后续数据处理的时候需要用到gtCoarse,要不然就会报错。

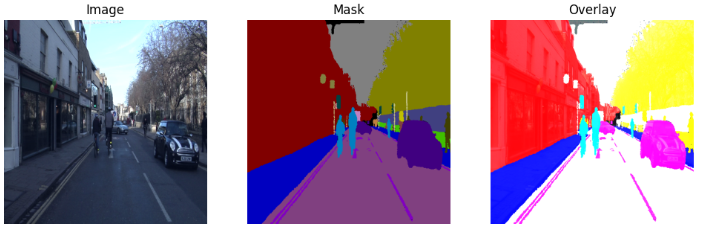

数据集简介

Cityscapes是一个专注于城市街道的语义分割数据集,特别适用于自动驾驶和城市环境中的语义分割任务。它提供了详细的像素级标注,涵盖了各种城市景观,如街道、建筑、交通信号灯等。

数据特点:

- 图像数量:包含5,000张高分辨率图像,其中包括2,975张训练图像,500张验证图像,1,525张测试图像。

- 类别:包含30个类别,其中19个是常见的语义分割类别,如道路、人行道、建筑物、车辆等。

- 挑战:数据集注重高分辨率图像(2048x1024),适用于复杂城市街道场景的分割任务。

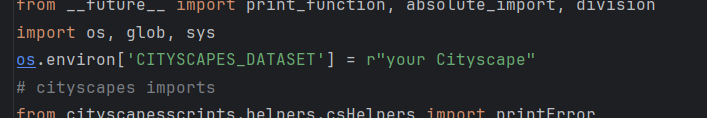

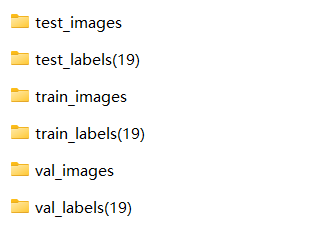

数据集处理

下载好这三个文件之后,我们需要通过代码来生成标注,可以直接下载对应的库,也可以去github上下载对应的工具。- pip install cityscapesscripts

加上这么一句,你的CityScape地址,然后直接运行该文件即可。注意你的地址文件夹下面应该包含gtFine和gtCoarse两个文件夹。- os.environ['CITYSCAPES_DATASET'] = r"your Cityscape"

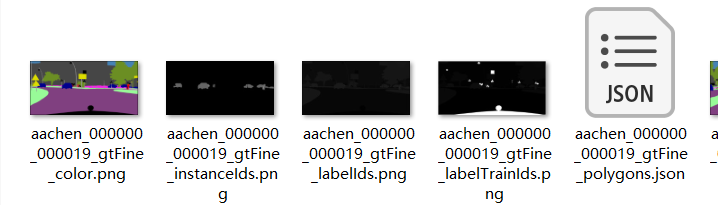

运行之后就可以直接生成标注文件了,注意这里生成的是19个类的标注文件,其实原gtFine文件夹中有标注文件的,只不过是33类别的。我们一般用到的都是19类别的。以labelids结尾的就是33类别的,labelTrainids结尾的就是我们刚刚生成的19类别的标准文件了。
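To show what the generated labelTrainIds files contain, here is a small NumPy sketch of the remapping from full label IDs to the 19 train IDs. The `ID_TO_TRAINID` table reflects my understanding of the label definitions shipped with cityscapesscripts (its `labels.py`), so please check it against your installed version. Every ID outside the table becomes 255, the value meant to be ignored during training.

```python
import numpy as np

# Assumed mapping from full Cityscapes label IDs to the 19 train IDs.
ID_TO_TRAINID = {
    7: 0, 8: 1, 11: 2, 12: 3, 13: 4, 17: 5, 19: 6, 20: 7, 21: 8, 22: 9,
    23: 10, 24: 11, 25: 12, 26: 13, 27: 14, 28: 15, 31: 16, 32: 17, 33: 18,
}

# Lookup table over all uint8 values; everything unlisted maps to 255 (ignore).
lut = np.full(256, 255, dtype=np.uint8)
for label_id, train_id in ID_TO_TRAINID.items():
    lut[label_id] = train_id


def label_ids_to_train_ids(label_ids):
    """Remap an (H, W) uint8 labelIds array to trainIds via table lookup."""
    return lut[label_ids]


toy = np.array([[7, 8, 0], [26, 33, 4]], dtype=np.uint8)
train_ids = label_ids_to_train_ids(toy)
print(train_ids)
```

In the toy array, road (7) becomes 0 and car (26) becomes 13, while IDs 0 and 4 fall outside the 19 classes and turn into 255.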

Next, gather the images and labels into flat folders:

    import os
    import shutil

    dataset_path = r"D:\博客记录\语义分割\data\Cityscape"

    for split in ('train', 'val', 'test'):
        # Original image and label folders (one sub-folder per city)
        src_images = os.path.join(dataset_path, 'leftImg8bit', split)
        src_labels = os.path.join(dataset_path, 'gtFine', split)
        # Flat output folders
        dst_images = os.path.join(dataset_path, f'{split}_images')
        dst_labels = os.path.join(dataset_path, f'{split}_labels(19)')
        os.makedirs(dst_images, exist_ok=True)
        os.makedirs(dst_labels, exist_ok=True)

        # Copy every image out of its city folder
        for city in os.listdir(src_images):
            city_dir = os.path.join(src_images, city)
            for image in os.listdir(city_dir):
                shutil.copy(os.path.join(city_dir, image), os.path.join(dst_images, image))

        # Copy only the 19-class label files (suffix labelTrainIds)
        for city in os.listdir(src_labels):
            city_dir = os.path.join(src_labels, city)
            for image in os.listdir(city_dir):
                if image.endswith('labelTrainIds.png'):
                    shutil.copy(os.path.join(city_dir, image), os.path.join(dst_labels, image))

Data loading (dataloader)

Cityscapes annotation images are stored in L mode.

    import os

    import albumentations as A
    import numpy as np
    from albumentations.pytorch.transforms import ToTensorV2
    from PIL import Image
    from torch.utils.data import Dataset, DataLoader

    # The last entry is only a display slot; in labelTrainIds files the
    # pixels outside the 19 classes carry the value 255, not 19.
    CITYSCAPES_CLASSES = [
        "road", "sidewalk", "building", "wall", "fence", "pole", "traffic light", "traffic sign", "vegetation",
        "terrain", "sky", "person", "rider", "car", "truck", "bus", "train", "motorcycle", "bicycle", "background",
    ]

    CITYSCAPES_COLORMAP = [
        [128, 64, 128],   # road
        [244, 35, 232],   # sidewalk
        [70, 70, 70],     # building
        [102, 102, 156],  # wall
        [190, 153, 153],  # fence
        [153, 153, 153],  # pole
        [250, 170, 30],   # traffic light
        [220, 220, 0],    # traffic sign
        [107, 142, 35],   # vegetation
        [152, 251, 152],  # terrain
        [70, 130, 180],   # sky
        [220, 20, 60],    # person
        [255, 0, 0],      # rider
        [0, 0, 142],      # car
        [0, 0, 70],       # truck
        [0, 60, 100],     # bus
        [0, 80, 100],     # train
        [0, 0, 230],      # motorcycle
        [119, 11, 32],    # bicycle
        [0, 0, 0],        # background
    ]

    class CityScapes(Dataset):
        def __init__(self, image_dir, label_dir):
            self.image_dir = image_dir
            self.label_dir = label_dir
            self.transform = A.Compose([
                A.Resize(448, 448),
                A.HorizontalFlip(),
                A.VerticalFlip(),
                A.Normalize(),
                ToTensorV2(),
            ])
            self.images = sorted(os.listdir(image_dir))
            self.labels = sorted(os.listdir(label_dir))
            assert len(self.images) == len(self.labels), "Images and labels count mismatch!"

        def __len__(self):
            return len(self.images)

        def __getitem__(self, idx):
            img_path = os.path.join(self.image_dir, self.images[idx])
            label_path = os.path.join(self.label_dir, self.labels[idx])
            image = np.array(Image.open(img_path).convert("RGB"))
            label = np.array(Image.open(label_path))
            # Albumentations expects (H, W, 3) and (H, W)
            transformed = self.transform(image=image, mask=label)
            # Cast to long: PyTorch classification losses expect integer (long) targets
            return transformed['image'], transformed['mask'].long()

    def get_dataloader(data_path, batch_size=4, num_workers=4):
        train_dir = os.path.join(data_path, 'train_images')
        val_dir = os.path.join(data_path, 'val_images')
        trainlabel_dir = os.path.join(data_path, 'train_labels(19)')
        vallabel_dir = os.path.join(data_path, 'val_labels(19)')
        train_dataset = CityScapes(train_dir, trainlabel_dir)
        val_dataset = CityScapes(val_dir, vallabel_dir)
        train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size, pin_memory=True, num_workers=num_workers)
        val_loader = DataLoader(val_dataset, shuffle=False, batch_size=batch_size, pin_memory=True, num_workers=num_workers)
        return train_loader, val_loader
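The dataset class above pairs images and labels purely by sorted order, which works as long as both folders are complete. If you want a safety net, the sketch below pairs files by their shared name prefix instead and fails loudly when a label is missing. `pair_by_stem` is a hypothetical helper, and the two suffixes are what I would expect for standard Cityscapes file names; adjust them if your files are named differently.

```python
def pair_by_stem(image_names, label_names,
                 image_suffix="_leftImg8bit.png",
                 label_suffix="_gtFine_labelTrainIds.png"):
    """Pair image and label file names that share the same prefix."""
    # Index the label files by the part of the name before the suffix.
    labels = {name[:-len(label_suffix)]: name
              for name in label_names if name.endswith(label_suffix)}
    pairs = []
    for name in sorted(image_names):
        if not name.endswith(image_suffix):
            continue
        stem = name[:-len(image_suffix)]
        if stem not in labels:
            raise FileNotFoundError(f"no label found for {name}")
        pairs.append((name, labels[stem]))
    return pairs


# Toy file names, deliberately out of order and with one unrelated label file.
toy_images = ["b_000001_000019_leftImg8bit.png", "a_000000_000019_leftImg8bit.png"]
toy_labels = ["a_000000_000019_gtFine_labelTrainIds.png",
              "b_000001_000019_gtFine_labelTrainIds.png",
              "a_000000_000019_gtFine_labelIds.png"]
pairs = pair_by_stem(toy_images, toy_labels)
print(pairs)
```

The returned list could replace the two `sorted(os.listdir(...))` calls if you prefer explicit pairing over relying on matching sort order.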

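ADE20K

Download

Dataset name: ADE20K

Download page: ADE20K dataset

Download it from that page. It also requires registration, which is a bit of a hassle.

Dataset overview

ADE20K is a large-scale semantic segmentation dataset covering a wide range of everyday scenes. Its broad set of class annotations is used to evaluate image segmentation algorithms.

Key facts:

- Number of images: more than 20K images, split into training, validation, and test sets.

- Classes: 150 semantic classes covering objects, scene elements, and some object parts (for example furniture, road, and sky).

- Challenges: ADE20K is a diverse, many-class dataset annotated with a large number of different object classes, which makes it suitable for large-scale semantic segmentation research.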

Data loading (dataloader)

ADE20K annotations are also stored in L mode.

    import os

    import albumentations as A
    import numpy as np
    from albumentations.pytorch.transforms import ToTensorV2
    from PIL import Image
    from torch.utils.data import Dataset, DataLoader

    ADE_CLASSES = [
        "background", "wall", "building", "sky", "floor", "tree", "ceiling", "road", "bed", "windowpane",
        "grass", "cabinet", "sidewalk", "person", "earth", "door", "table", "mountain", "plant", "curtain",
        "chair", "car", "water", "painting", "sofa", "shelf", "house", "sea", "mirror", "rug",
        "field", "armchair", "seat", "fence", "desk", "rock", "wardrobe", "lamp", "bathtub", "railing",
        "cushion", "base", "box", "column", "signboard", "chest of drawers", "counter", "sand", "sink", "skyscraper",
        "fireplace", "refrigerator", "grandstand", "path", "stairs", "runway", "case", "pool table", "pillow", "screen door",
        "stairway", "river", "bridge", "bookcase", "blind", "coffee table", "toilet", "flower", "book", "hill",
        "bench", "countertop", "stove", "palm", "kitchen island", "computer", "swivel chair", "boat", "bar", "arcade machine",
        "hovel", "bus", "towel", "light", "truck", "tower", "chandelier", "awning", "streetlight", "booth",
        "television receiver", "airplane", "dirt track", "apparel", "pole", "land", "bannister", "escalator", "ottoman", "bottle",
        "buffet", "poster", "stage", "van", "ship", "fountain", "conveyer belt", "canopy", "washer", "plaything",
        "swimming pool", "stool", "barrel", "basket", "waterfall", "tent", "bag", "minibike", "cradle", "oven",
        "ball", "food", "step", "tank", "trade name", "microwave", "pot", "animal", "bicycle", "lake",
        "dishwasher", "screen", "blanket", "sculpture", "hood", "sconce", "vase", "traffic light", "tray", "ashcan",
        "fan", "pier", "crt screen", "plate", "monitor", "bulletin board", "shower", "radiator", "glass", "clock",
        "flag"
    ]

    ADE_COLORMAP = [[0, 0, 0],
        [120, 120, 120], [180, 120, 120], [6, 230, 230], [80, 50, 50], [4, 200, 3],
        [120, 120, 80], [140, 140, 140], [204, 5, 255], [230, 230, 230], [4, 250, 7],
        [224, 5, 255], [235, 255, 7], [150, 5, 61], [120, 120, 70], [8, 255, 51],
        [255, 6, 82], [143, 255, 140], [204, 255, 4], [255, 51, 7], [204, 70, 3],
        [0, 102, 200], [61, 230, 250], [255, 6, 51], [11, 102, 255], [255, 7, 71],
        [255, 9, 224], [9, 7, 230], [220, 220, 220], [255, 9, 92], [112, 9, 255],
        [8, 255, 214], [7, 255, 224], [255, 184, 6], [10, 255, 71], [255, 41, 10],
        [7, 255, 255], [224, 255, 8], [102, 8, 255], [255, 61, 6], [255, 194, 7],
        [255, 122, 8], [0, 255, 20], [255, 8, 41], [255, 5, 153], [6, 51, 255],
        [235, 12, 255], [160, 150, 20], [0, 163, 255], [140, 140, 140], [250, 10, 15],
        [20, 255, 0], [31, 255, 0], [255, 31, 0], [255, 224, 0], [153, 255, 0],
        [0, 0, 255], [255, 71, 0], [0, 235, 255], [0, 173, 255], [31, 0, 255],
        [11, 200, 200], [255, 82, 0], [0, 255, 245], [0, 61, 255], [0, 255, 112],
        [0, 255, 133], [255, 0, 0], [255, 163, 0], [255, 102, 0], [194, 255, 0],
        [0, 143, 255], [51, 255, 0], [0, 82, 255], [0, 255, 41], [0, 255, 173],
        [10, 0, 255], [173, 255, 0], [0, 255, 153], [255, 92, 0], [255, 0, 255],
        [255, 0, 245], [255, 0, 102], [255, 173, 0], [255, 0, 20], [255, 184, 184],
        [0, 31, 255], [0, 255, 61], [0, 71, 255], [255, 0, 204], [0, 255, 194],
        [0, 255, 82], [0, 10, 255], [0, 112, 255], [51, 0, 255], [0, 194, 255],
        [0, 122, 255], [0, 255, 163], [255, 153, 0], [0, 255, 10], [255, 112, 0],
        [143, 255, 0], [82, 0, 255], [163, 255, 0], [255, 235, 0], [8, 184, 170],
        [133, 0, 255], [0, 255, 92], [184, 0, 255], [255, 0, 31], [0, 184, 255],
        [0, 214, 255], [255, 0, 112], [92, 255, 0], [0, 224, 255], [112, 224, 255],
        [70, 184, 160], [163, 0, 255], [153, 0, 255], [71, 255, 0], [255, 0, 163],
        [255, 204, 0], [255, 0, 143], [0, 255, 235], [133, 255, 0], [255, 0, 235],
        [245, 0, 255], [255, 0, 122], [255, 245, 0], [10, 190, 212], [214, 255, 0],
        [0, 204, 255], [20, 0, 255], [255, 255, 0], [0, 153, 255], [0, 41, 255],
        [0, 255, 204], [41, 0, 255], [41, 255, 0], [173, 0, 255], [0, 245, 255],
        [71, 0, 255], [122, 0, 255], [0, 255, 184], [0, 92, 255], [184, 255, 0],
        [0, 133, 255], [255, 214, 0], [25, 194, 194], [102, 255, 0], [92, 0, 255]
    ]

    class ADE20kDataset(Dataset):
        def __init__(self, image_dir, label_dir):
            self.image_dir = image_dir
            self.label_dir = label_dir
            self.transform = A.Compose([
                A.Resize(448, 448),
                A.HorizontalFlip(),
                A.VerticalFlip(),
                A.Normalize(),
                ToTensorV2(),
            ])
            self.images = sorted(os.listdir(image_dir))
            self.labels = sorted(os.listdir(label_dir))
            assert len(self.images) == len(self.labels), "Images and labels count mismatch!"

        def __len__(self):
            return len(self.images)

        def __getitem__(self, idx):
            img_path = os.path.join(self.image_dir, self.images[idx])
            label_path = os.path.join(self.label_dir, self.labels[idx])
            image = np.array(Image.open(img_path).convert("RGB"))
            label = np.array(Image.open(label_path))
            # Albumentations expects (H, W, 3) and (H, W)
            transformed = self.transform(image=image, mask=label)
            # Cast to long: PyTorch classification losses expect integer (long) targets
            return transformed['image'], transformed['mask'].long()

    def get_dataloader(data_path, batch_size=4, num_workers=4):
        train_dir = os.path.join(data_path, 'images', 'training')
        val_dir = os.path.join(data_path, 'images', 'validation')
        trainlabel_dir = os.path.join(data_path, 'annotations', 'training')
        vallabel_dir = os.path.join(data_path, 'annotations', 'validation')
        train_dataset = ADE20kDataset(train_dir, trainlabel_dir)
        val_dataset = ADE20kDataset(val_dir, vallabel_dir)
        train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size, pin_memory=True, num_workers=num_workers)
        val_loader = DataLoader(val_dataset, shuffle=False, batch_size=batch_size, pin_memory=True, num_workers=num_workers)
        return train_loader, val_loader
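In the class list above, index 0 is "background" and the 150 real classes occupy 1 to 150. A common alternative, which this post does not use, is to train on the 150 classes only: shift every label down by one and send the old 0 to an ignore value. The sketch below shows that remapping; `reduce_zero_label` is a name borrowed from how some toolkits describe the option, and 255 as the ignore value is an assumption.

```python
import numpy as np


def reduce_zero_label(label, ignore_index=255):
    """Shift labels 1..150 down to 0..149 and mark the old 0 as ignored."""
    label = label.astype(np.int64)
    out = label - 1
    out[label == 0] = ignore_index
    return out.astype(np.uint8)


toy = np.array([[0, 1, 2], [150, 0, 3]], dtype=np.uint8)
reduced = reduce_zero_label(toy)
print(reduced)
```

With this variant the model has 150 output channels instead of 151, and the loss has to be told to skip the ignore value.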

COCO2017

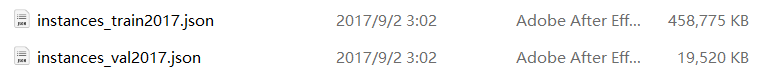

下载

数据集名称:COCO2017

数据集下载地址:COCO - Common Objects in Context

下载我所框出来的三个文件,分别是训练图像,验证图像和标注文件。标注文件是json格式的,后续我们将会进行转换。

数据集简介

COCO2017 数据集是一个大规模的数据集,设计用于物体检测、分割和关键点检测等任务。COCO数据集特别注重“上下文”信息,提供了丰富的标注和不同尺度的物体实例。

数据特点:

- 图像数量:训练集包含118K张图像,验证集包含5K张图像,测试集包含20K张图像。

- 类别:包括80个物体类别,如人、动物、交通工具、家具等。

- 标注:除了物体检测和实例分割标注外,COCO还提供了分割掩码、关键点标注等。

- 复杂性:COCO包含大量的遮挡、重叠物体、不同背景等,适合挑战性强的分割任务。

数据集处理

下载好三个文件之后,我们需要通过annotation中的instances_train2017和instances_val2017来转换获得我们的mask标注文件,我将其转换成了P模式的。

The conversion code:

    import os

    import imgviz
    import numpy as np
    from PIL import Image
    from pycocotools import mask as maskUtils
    from pycocotools.coco import COCO
    from tqdm import tqdm

    def coco_to_semantic_mask_pmode(coco_json_path, image_dir, save_mask_dir):
        os.makedirs(save_mask_dir, exist_ok=True)
        coco = COCO(coco_json_path)
        img_ids = coco.getImgIds()
        cat_ids = coco.getCatIds()
        # +1 keeps 0 for background
        catid2label = {cat_id: i + 1 for i, cat_id in enumerate(cat_ids)}

        # Color table, flattened to the 1-D list that putpalette expects
        colormap = imgviz.label_colormap()
        palette = [v for color in colormap for v in color]
        palette += [0] * (256 * 3 - len(palette))  # pad to 256 colors

        for img_id in tqdm(img_ids):
            img_info = coco.loadImgs(img_id)[0]
            ann_ids = coco.getAnnIds(imgIds=img_id)
            anns = coco.loadAnns(ann_ids)
            h, w = img_info['height'], img_info['width']
            mask = np.zeros((h, w), dtype=np.uint8)

            for ann in anns:
                label = catid2label[ann['category_id']]
                rle = coco.annToRLE(ann)
                m = maskUtils.decode(rle)
                # Later annotations overwrite earlier ones where they overlap
                mask[m == 1] = label

            # Save in P mode (with a palette)
            mask_img = Image.fromarray(mask, mode='P')
            mask_img.putpalette(palette)
            mask_img.save(os.path.join(save_mask_dir, img_info['file_name'].replace('.jpg', '.png')))

        print(f"Saved {len(img_ids)} P-mode semantic segmentation masks to {save_mask_dir}")

    # ==== Usage ====
    # Training set
    coco_to_semantic_mask_pmode(
        coco_json_path='../../data/COCO2017/annotations1/instances_train2017.json',
        image_dir='../../data/COCO2017/train2017',
        save_mask_dir='../../data/COCO2017/train2017_labels'
    )

    # Validation set
    coco_to_semantic_mask_pmode(
        coco_json_path='../../data/COCO2017/annotations1/instances_val2017.json',
        image_dir='../../data/COCO2017/val2017',
        save_mask_dir='../../data/COCO2017/val2017_labels'
    )
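Two details of the conversion are easy to miss, so here is a small pure-Python sketch of them. First, COCO category IDs are not contiguous (they run up to 90 with gaps), which is why the script builds `catid2label`. Second, where instances overlap, whichever annotation is painted last wins; painting large objects first is one optional tweak, not something the original script does. `build_label_map` and the toy annotations are made up for illustration.

```python
def build_label_map(category_ids):
    """Map sparse category IDs to contiguous labels 1..N (0 stays background)."""
    return {cat_id: i + 1 for i, cat_id in enumerate(sorted(category_ids))}


# Toy subset of COCO-style category IDs with gaps (assumed for illustration).
toy_cat_ids = [1, 2, 3, 13, 90]
label_map = build_label_map(toy_cat_ids)

# Painting larger objects first lets smaller ones stay visible on top.
toy_anns = [
    {"category_id": 13, "area": 50.0},
    {"category_id": 1, "area": 900.0},
    {"category_id": 90, "area": 10.0},
]
paint_order = sorted(toy_anns, key=lambda ann: ann["area"], reverse=True)
painted_labels = [label_map[ann["category_id"]] for ann in paint_order]

print(label_map)
print(painted_labels)
```

In the real script, the equivalent change would be to sort `anns` by their `area` field before the inner loop.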

Data loading (dataloader)

After the processing above, the COCO2017 annotations are in P mode.

    import os

    import albumentations as A
    import imgviz
    import numpy as np
    from albumentations.pytorch.transforms import ToTensorV2
    from PIL import Image
    from torch.utils.data import Dataset, DataLoader

    COCO_CLASSES = [
        "background", "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat",
        "traffic light", "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep",
        "cow", "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase",
        "frisbee", "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard", "tennis racket",
        "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple", "sandwich",
        "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch", "potted plant",
        "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone", "microwave",
        "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear", "hair drier",
        "toothbrush"
    ]

    COCO_COLORMAP = imgviz.label_colormap()

    class COCO2017Dataset(Dataset):
        def __init__(self, image_dir, label_dir):
            self.image_dir = image_dir
            self.label_dir = label_dir
            self.transform = A.Compose([
                A.Resize(448, 448),
                A.HorizontalFlip(),
                A.VerticalFlip(),
                A.Normalize(),
                ToTensorV2(),
            ])
            self.images = sorted(os.listdir(image_dir))
            self.labels = sorted(os.listdir(label_dir))
            assert len(self.images) == len(self.labels), "Images and labels count mismatch!"

        def __len__(self):
            return len(self.images)

        def __getitem__(self, idx):
            img_path = os.path.join(self.image_dir, self.images[idx])
            label_path = os.path.join(self.label_dir, self.labels[idx])
            image = np.array(Image.open(img_path).convert("RGB"))
            label = np.array(Image.open(label_path))
            # Albumentations expects (H, W, 3) and (H, W)
            transformed = self.transform(image=image, mask=label)
            # Cast to long: PyTorch classification losses expect integer (long) targets
            return transformed['image'], transformed['mask'].long()

    def get_dataloader(data_path, batch_size=4, num_workers=4):
        train_dir = os.path.join(data_path, 'train2017')
        val_dir = os.path.join(data_path, 'val2017')
        trainlabel_dir = os.path.join(data_path, 'train2017_labels')
        vallabel_dir = os.path.join(data_path, 'val2017_labels')
        train_dataset = COCO2017Dataset(train_dir, trainlabel_dir)
        val_dataset = COCO2017Dataset(val_dir, vallabel_dir)
        train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size, pin_memory=True, num_workers=num_workers)
        val_loader = DataLoader(val_dataset, shuffle=False, batch_size=batch_size, pin_memory=True, num_workers=num_workers)
        return train_loader, val_loader

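Conclusion

These are the datasets most commonly used for semantic segmentation, and each has its own strengths:

- VOC2012 is better suited to beginners and basic experiments.

- COCO2017 and ADE20K suit large-scale, many-class, complex segmentation tasks.

- CamVid and Cityscapes focus on semantic segmentation for autonomous driving and urban environments.

I hope this is helpful. If you spot any mistakes, corrections are welcome!

If you would like to repost this article, feel free to do so; just credit the original source. Thanks!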

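References

Semantic segmentation labels: reading and saving masks (CSDN blog)

Semantic segmentation datasets: using Cityscapes (CSDN blog)

The ADE20K dataset (Zhihu)

A detailed guide to using the Cityscapes training set for image semantic segmentation (CSDN blog)

Dataset: CamVid, a detailed guide to its introduction, download, and usage (CSDN blog)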
